Machdyne makes open-source FPGA computers. In this post we’ll explain what an FPGA computer is, what they can be used for, and why we’re bothering to make them.

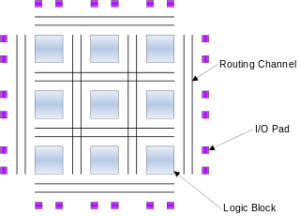

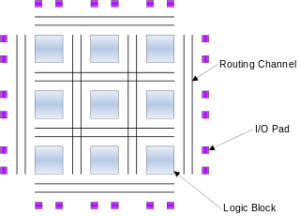

An FPGA (field-programmable gate array) is a chip that contains an array of programmable logic blocks. Together, these logic blocks can be configured to act as various digital circuits. FPGAs often have thousands of logic blocks that allow them to act as complex circuits, such as a CPU and a chipset, also known as a System-on-a-Chip (SoC).
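A common kind of programmable logic block is the look-up table (LUT). A LUT is a small memory that holds a truth table, so the same piece of hardware computes whatever Boolean function its configuration bits describe. The Python sketch below is a simplified, hypothetical model: the `LUT4` class and the `config_for` helper are illustrative names, not part of any FPGA toolchain. It configures one 4-input LUT as an AND gate and another as a 4-input XOR. Real FPGA logic blocks typically also include flip-flops, carry logic and programmable routing, and this model leaves all of those out.

```python
class LUT4:
    """Simplified model of a 4-input look-up table (LUT).

    The 16 configuration bits are the LUT's truth table: bit i holds the
    output for the input combination whose binary value is i.
    """

    def __init__(self, config: int) -> None:
        if not 0 <= config < 1 << 16:
            raise ValueError("a 4-input LUT needs exactly 16 configuration bits")
        self.config = config

    def __call__(self, a: int, b: int, c: int, d: int) -> int:
        # The inputs select one entry of the truth table (a is the lowest bit).
        index = (d << 3) | (c << 2) | (b << 1) | a
        return (self.config >> index) & 1


def config_for(func) -> int:
    """Hypothetical helper: compute the 16 configuration bits that make a
    LUT4 behave like the Boolean function func(a, b, c, d)."""
    bits = 0
    for index in range(16):
        a, b, c, d = (index >> 0) & 1, (index >> 1) & 1, (index >> 2) & 1, (index >> 3) & 1
        if func(a, b, c, d):
            bits |= 1 << index
    return bits


# Same "hardware", two different configurations.
and4 = LUT4(config_for(lambda a, b, c, d: a & b & c & d))
xor4 = LUT4(config_for(lambda a, b, c, d: a ^ b ^ c ^ d))

print(hex(and4.config), and4(1, 1, 1, 1), and4(1, 0, 1, 1))  # 0x8000 1 0
print(hex(xor4.config), xor4(1, 0, 0, 0), xor4(1, 1, 0, 0))  # 0x6996 1 0
```

Both LUTs are the same model. Only their 16 configuration bits differ: `0x8000` for AND and `0x6996` for XOR.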

An FPGA (field-programmable gate array) is a chip that contains an array of programmable logic blocks. Together, these logic blocks can be configured to act as various digital circuits. FPGAs often have thousands of logic blocks that allow them to act as complex circuits, such as a CPU and a chipset, also known as a System-on-a-Chip (SoC).

FPGA computers can become completely different systems by simply updating their configuration memory with different “gateware”. For example, an FPGA computer could be configured as a 64-bit RISC-V SoC running Linux one minute, and a NES with an 8-bit 6502 CPU running Super Mario Bros the next minute.
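Reconfiguration can be sketched the same way, as a toy model. The names `ToyFabric`, `HALF_ADDER` and `COMPARATOR` are hypothetical. Real gateware is a much larger bitstream that synthesis and place-and-route tools produce. In this sketch, the "fabric" is two 2-input LUTs that share the same inputs, and its entire configuration memory is two 4-bit truth tables. Loading one configuration turns the fabric into a half adder. Loading another turns the same fabric into a 1-bit comparator, and no wiring changes.

```python
from dataclasses import dataclass, field


def lut2(config: int, a: int, b: int) -> int:
    """Evaluate a 2-input LUT whose 4-bit config is its truth table."""
    return (config >> ((b << 1) | a)) & 1


@dataclass
class ToyFabric:
    """Hypothetical toy 'FPGA': two 2-input LUTs wired to the same inputs.
    Its whole configuration memory is a list of two 4-bit truth tables."""

    config_memory: list[int] = field(default_factory=lambda: [0, 0])

    def load(self, gateware: list[int]) -> None:
        # Reconfiguring only overwrites configuration memory; no wiring changes.
        if len(gateware) != 2 or not all(0 <= cfg < 16 for cfg in gateware):
            raise ValueError("expected two 4-bit LUT configurations")
        self.config_memory = list(gateware)

    def run(self, a: int, b: int) -> tuple[int, int]:
        out0, out1 = (lut2(cfg, a, b) for cfg in self.config_memory)
        return out0, out1


# Truth tables are indexed by (b << 1) | a, lowest bit first.
HALF_ADDER = [0b0110, 0b1000]  # outputs: (sum = a XOR b, carry = a AND b)
COMPARATOR = [0b1001, 0b0010]  # outputs: (a == b, a > b)

fabric = ToyFabric()
fabric.load(HALF_ADDER)
print(fabric.run(1, 1))  # (0, 1): sum 0, carry 1
fabric.load(COMPARATOR)
print(fabric.run(1, 0))  # (0, 1): not equal, a is greater
```

A real FPGA computer works at a far larger scale, but the principle is the same. Swapping the contents of configuration memory changes what circuit the chip implements.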

Some use cases for FPGA computers include:

- General purpose computing.

- Timeless computing.

- Retro computing and retro gaming.

- Custom CPU and SoC development.

You might say, “Hey, I can already do all of that on my regular computer.” That’s true, and your everyday computer will handle general-purpose computing much faster than an FPGA computer can.

So why are we bothering to make FPGA computers? While we are interested in advancing all of the above use cases, our primary focus is on what we call timeless computing – the use of computers to run timeless applications. Our vision is to create a stable, secure, responsive computing environment for the most important timeless applications (reading, writing, math, education, organization, communication, automation, etc.).

You can think of this as a supplemental computer that you would only use for certain tasks, like writing a book or learning something new, but probably not for paying your taxes or browsing the web.

These computers will not replace your “daily driver” but they will provide a simple environment without many of the distractions and annoyances found with modern computers. And because they are simple, understandable and completely open-source, they have the potential to provide a level of privacy and security not possible with most modern computers.

In order to create such an environment we’re pursuing two simultaneous approaches for the gateware and software:

- Kakao Linux – This is a Linux distribution that runs on top of a RISC-V SoC that is optimized for running Linux on our hardware. This works today and you can read about what’s already possible in our post on practical timeless computing.

- Zucker Zeitlos SOC/OS – This is a custom RISC-V SoC and OS designed for our hardware. Over the coming years we intend to create a completely new computing experience, with a new graphical operating system and new applications developed in tandem with our SoC and specifically for our hardware. The result will be something like what computing might look like in a parallel universe where everything progressed in a slightly different, and maybe better, way.

While our computer hardware is still evolving, it was all created with our long-term goals in mind, and we intend to support all of our hardware into the future. You can confidently buy any of our computers today knowing that they have the ability to gain new and improved functionality in the future. And because our hardware, software and the toolchains they use are open-source, you can modify our computers however you want and even create your own CPU, SoC and software.

Thanks for reading. We want the FPGA computer to be an elegant tool for a more civilized age that’s yet to come, and you’re invited to join us on the journey to bring them into existence. You can follow us on X and GitHub for the latest updates.

Survival is the ability to exist in the future.

Survival is the ability to exist in the future.

There doesn’t appear to be anything supernatural about recent advances in AI. Despite the hype, and despite being impressive, it’s still completely understandable, at some level, by humans. In that sense, it may not seem exactly like the AI of science fiction.

There doesn’t appear to be anything supernatural about recent advances in AI. Despite the hype, and despite being impressive, it’s still completely understandable, at some level, by humans. In that sense, it may not seem exactly like the AI of science fiction. In order to make our

In order to make our  For quite a while now we’ve wanted to set up a forum for our customers to communicate and share ideas with each other. We tried a few different things but nothing felt quite right. We’re excited to announce a new experiment that we hope our community will find useful: Machdyne

For quite a while now we’ve wanted to set up a forum for our customers to communicate and share ideas with each other. We tried a few different things but nothing felt quite right. We’re excited to announce a new experiment that we hope our community will find useful: Machdyne  In order to make our products more available and affordable we’ve begun to experiment with outsourcing the assembly of certain PCBs.

In order to make our products more available and affordable we’ve begun to experiment with outsourcing the assembly of certain PCBs. One of the goals of Machdyne is to create

One of the goals of Machdyne is to create  We think that

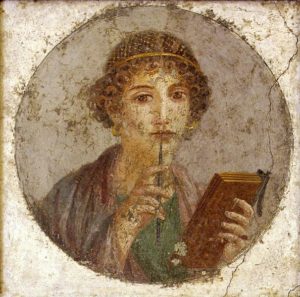

We think that  Deep thinking often requires writing. The writing down of thoughts can be affected by perceived privacy. Thus, the erosion of perceived privacy may reduce the capacity for deep thought.

Deep thinking often requires writing. The writing down of thoughts can be affected by perceived privacy. Thus, the erosion of perceived privacy may reduce the capacity for deep thought. An FPGA (field-programmable gate array) is a chip that contains an array of programmable logic blocks. Together, these logic blocks can be configured to act as various digital circuits. FPGAs often have thousands of logic blocks that allow them to act as complex circuits, such as a CPU and a chipset, also known as a System-on-a-Chip (SoC).

An FPGA (field-programmable gate array) is a chip that contains an array of programmable logic blocks. Together, these logic blocks can be configured to act as various digital circuits. FPGAs often have thousands of logic blocks that allow them to act as complex circuits, such as a CPU and a chipset, also known as a System-on-a-Chip (SoC). We decided earlier this year to temporarily slow down PCB design and production in order to build a pick-and-place machine. Manual PCB assembly can be a tedious, repetitive, time-consuming and sometimes stressful process and we were spending most of our time assembling boards. We determined that at least partially automating the assembly process was the only sustainable way to continue producing products ourselves.

We decided earlier this year to temporarily slow down PCB design and production in order to build a pick-and-place machine. Manual PCB assembly can be a tedious, repetitive, time-consuming and sometimes stressful process and we were spending most of our time assembling boards. We determined that at least partially automating the assembly process was the only sustainable way to continue producing products ourselves. Machdyne began in 2021 as the experimental hardware division of

Machdyne began in 2021 as the experimental hardware division of