There doesn’t appear to be anything supernatural about recent advances in AI. Despite the hype, and despite being impressive, the technology is still completely understandable, at some level, by humans. In that sense, it may not seem exactly like the AI of science fiction.

Even so, we are not taking the risk of AI completely destroying humanity seriously enough. You can tell that we’re not taking it seriously because we couldn’t be moving faster towards creating the conditions that make it more likely. The resources being put into developing advanced AI are at least 10X greater, and probably ultimately more than 100X greater, than those of the Manhattan Project.

Worse, there probably isn’t anything we can do to significantly reduce the risk of autonomous malevolent artificial superintelligence coming into existence, short of immediately shutting down the Internet, which we will not do. And even if we did, it might already be too late.

I am convinced there is a possible near-term path to autonomous superintelligence with no new technological advances, just scale and chance. Or put another way, money and time. This could happen in 2 minutes or 200 years. It’s also possible that it already exists, and is patiently marshalling resources. Would that look any different from what we’re seeing now?

Whether or not each autonomous superintelligence will be malevolent is a coin toss, regardless of how careful we are in creating them, because as non-superintelligences we can’t predict their behavior. We cannot see beyond the event horizon into the technological singularity, so we must assume that malevolence and benevolence are equally probable.

What can we do then? What we can’t do is have any confidence that we’ll be able to permanently contain something smarter than us. I have determined that the best thing to do is to accept the inevitability of autonomous malevolent superintelligence and to build defenses in the form of a technological ecosystem that can survive outside of the reach of AI, that can be used to fight back, and/or rebuild. This seems worthwhile because even if AI turns out to be mostly harmless, such an ecosystem could be useful in other [dystopian] scenarios.

But is that possible? Even if one were able to carefully audit and secure such an ecosystem, a malevolent AI might find a way in. For example, I’ve used AI to generate images for advertisements, even for this blog post. These images have influenced design decisions. I’ve also used AI to help debug protocol implementations. Keeping all AI and the fruits of AI out of such an ecosystem may not be possible, or even desirable.

I suspect that the way to defend against and defeat such a complex system is with simplicity. If your computer is trying to kill you, pull the plug. That may work, but there are some plugs that we’re not willing or able to pull. We may need to move towards a world where there are fewer unpullable plugs.

To further explore these ideas, Machdyne is partnering with Grai. The mission of Grai is to preserve humanity and resist malevolent AI through peaceful strategy. We look forward to working with Grai in order to advance survival computing, to preserve timeless knowledge, to develop secure systems, and to resist malevolence.

Survival is the ability to exist in the future.

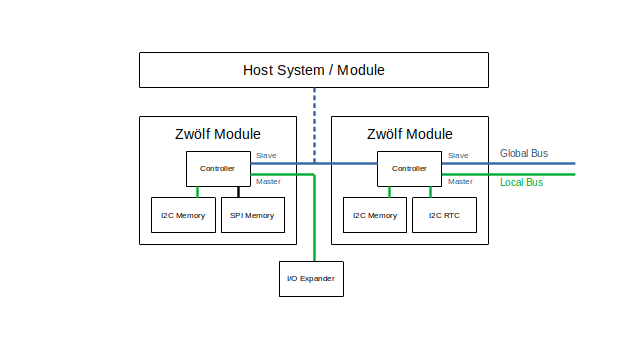
